LLM Report

About LLM Report

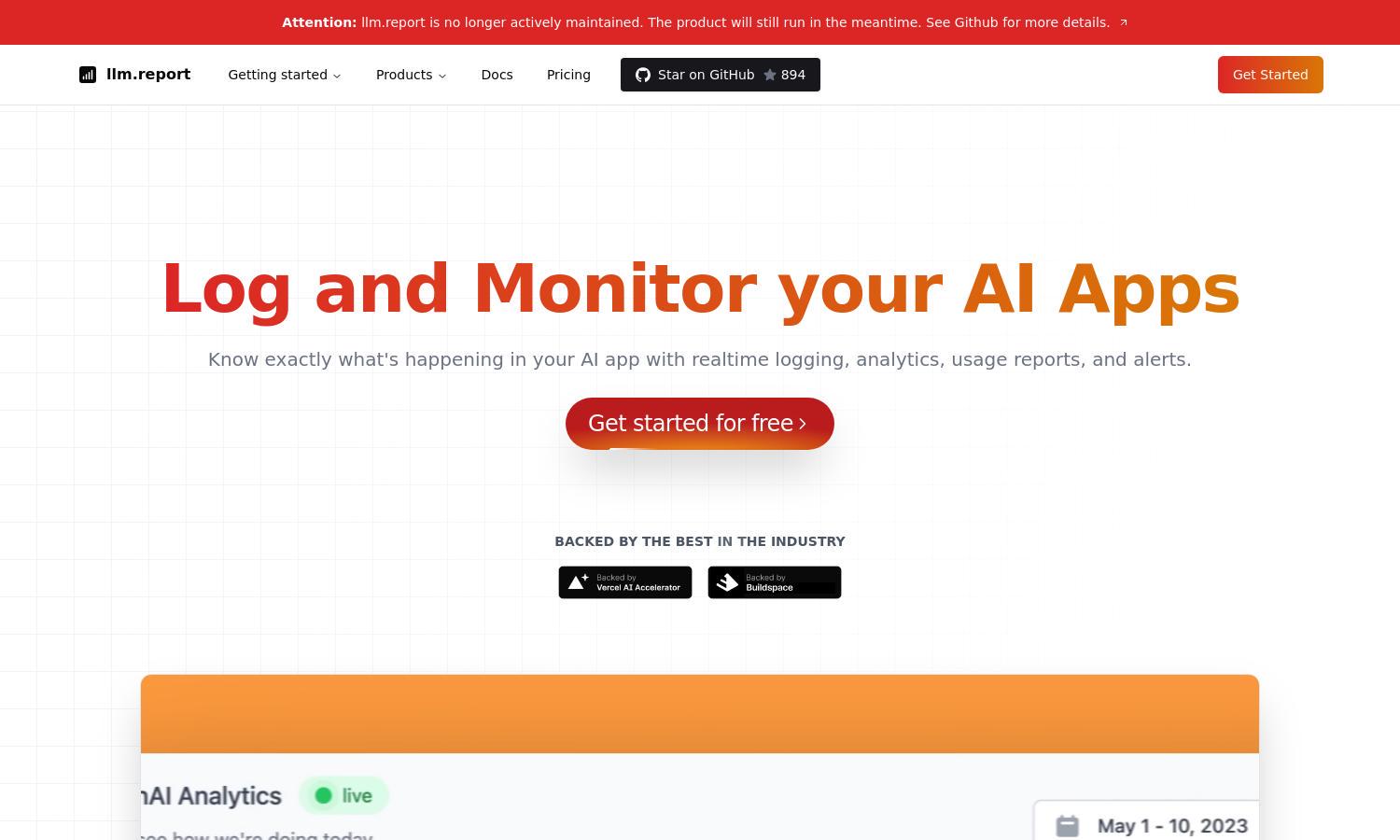

LLM Report provides real-time logging and analytics for AI applications. It gives users insight into their OpenAI usage so they can track API interactions and reduce costs. Its dashboard is aimed at developers and businesses that care about efficiency and performance.

LLM Report offers a free plan with basic features and a premium subscription, billed monthly, that unlocks advanced analytics and optimization tools. Premium users get detailed usage reports and alerts, which make it easier to manage the cost of OpenAI services.

LLM Report's interface is designed for easy navigation between its functions. The dashboard gives direct access to key insights and analytics, so users can track the performance of their AI applications and optimize token usage.

How LLM Report works

Users sign up for LLM Report and enter their OpenAI API key to connect their account. They can then view real-time data on API usage, track costs, and get immediate feedback on their prompts and completions. The dashboard presents these details so users can spot trends and adjust their usage to be more efficient.

Key Features of LLM Report

Real-Time Analytics

LLM Report delivers real-time analytics, letting users monitor their OpenAI usage as it happens. This helps them spot inefficiencies, reduce costs, and make informed decisions about the performance of their AI applications.

Cost Tracking

LLM Report's cost tracking visualizes the expenses associated with OpenAI API usage. Users can measure cost per user, adjust their pricing strategies accordingly, and keep overall spending under control.
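To make "cost per user" concrete, here is a minimal Python sketch of the underlying arithmetic. It is not LLM Report's implementation. The `UsageRecord` type, the function names, and the per-1K-token prices are all hypothetical and chosen only for illustration; substitute the rates for the model you actually use.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens (assumptions, not real rates).
PROMPT_PRICE_PER_1K = 0.50
COMPLETION_PRICE_PER_1K = 1.50


@dataclass
class UsageRecord:
    """One logged API request (hypothetical record shape)."""
    user_id: str
    prompt_tokens: int
    completion_tokens: int


def request_cost(record: UsageRecord) -> float:
    """Cost of a single request: tokens / 1000 * price, summed over both kinds."""
    return (record.prompt_tokens / 1000 * PROMPT_PRICE_PER_1K
            + record.completion_tokens / 1000 * COMPLETION_PRICE_PER_1K)


def cost_per_user(records: list[UsageRecord]) -> dict[str, float]:
    """Sum request costs for each user."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record.user_id] += request_cost(record)
    return dict(totals)


records = [
    UsageRecord("alice", prompt_tokens=1000, completion_tokens=2000),
    UsageRecord("bob", prompt_tokens=2000, completion_tokens=0),
    UsageRecord("alice", prompt_tokens=1000, completion_tokens=0),
]
per_user = cost_per_user(records)
```

Comparing each user's total against what that user pays is one way to check whether a pricing tier covers its API cost.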

User Insights

LLM Report offers detailed user insights that help businesses understand how their AI applications perform. It analyzes prompt usage, token consumption, and user behavior, giving users concrete data for improving their applications and their efficiency.
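As a rough illustration of this kind of analysis, the Python sketch below summarizes token consumption per user from a list of log entries. It is an assumption-laden example, not LLM Report's method. The entry shape and the `summarize_tokens` name are hypothetical; the `prompt_tokens` and `completion_tokens` keys mirror the usage counts that OpenAI API responses report.

```python
from collections import defaultdict


def summarize_tokens(log_entries):
    """Aggregate request counts and token totals per user.

    Each entry is assumed to be a dict with the keys
    "user", "prompt_tokens", and "completion_tokens".
    """
    totals = defaultdict(
        lambda: {"requests": 0, "prompt_tokens": 0, "completion_tokens": 0}
    )
    for entry in log_entries:
        stats = totals[entry["user"]]
        stats["requests"] += 1
        stats["prompt_tokens"] += entry["prompt_tokens"]
        stats["completion_tokens"] += entry["completion_tokens"]

    # Add the average total tokens per request for each user.
    summary = {}
    for user, stats in totals.items():
        total_tokens = stats["prompt_tokens"] + stats["completion_tokens"]
        summary[user] = {
            **stats,
            "avg_tokens_per_request": total_tokens / stats["requests"],
        }
    return summary


log_entries = [
    {"user": "alice", "prompt_tokens": 120, "completion_tokens": 80},
    {"user": "alice", "prompt_tokens": 300, "completion_tokens": 100},
    {"user": "bob", "prompt_tokens": 50, "completion_tokens": 50},
]
summary = summarize_tokens(log_entries)
```

A user whose average tokens per request is far above the rest is a natural first place to look for overly long prompts.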