About Agenta

Agenta is an open-source LLMOps platform that helps AI teams move from chaotic experimentation to reliable, shipped applications. It targets the core inefficiency in LLM development: unpredictability. When prompts are scattered across emails and spreadsheets, teams work in silos, and deployments rest on gut feeling, shipping becomes a gamble. Agenta replaces this guesswork with a unified, collaborative hub for the entire LLM lifecycle. It is built for cross-functional teams of developers, product managers, and domain experts who need to move fast without breaking things.

The core value proposition is speed through structure. By bringing prompt experimentation, automated evaluation, and production observability into one integrated workflow, Agenta turns fragmented processes into a streamlined pipeline. You iterate on prompts with real data, validate every change with evidence-based evaluations, and debug issues using detailed traces. The goal is a shorter path to production for LLM-powered features, with greater confidence in their performance and reliability.

Agenta is model-agnostic and framework-friendly, designed to fit into your existing stack without vendor lock-in, which makes it a strong foundation for teams serious about building robust AI products.

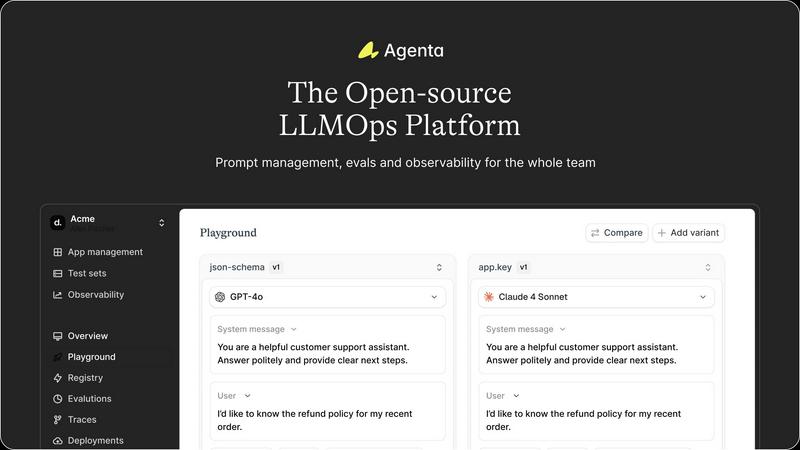

Features of Agenta

Unified Experimentation Playground

Accelerate prompt and model iteration with a centralized playground designed for speed. Compare multiple prompts, parameters, and models from different providers side by side in real time. Every experiment is automatically versioned, creating a complete audit trail of changes. This replaces scattered scripts and documents, allowing developers and domain experts to collaborate directly on refining LLM behavior using actual production data and saved test cases.

Automated Evidence-Based Evaluation

Replace subjective "vibe checks" with systematic, automated testing to validate performance. Agenta lets you integrate any evaluation method, including LLM-as-a-judge, custom code, or built-in metrics. Critically, you can evaluate the full reasoning trace of complex agents, not just the final output, pinpointing exactly where failures occur. This creates a fast, repeatable process to ensure every prompt update or model switch genuinely improves your application before it ships.
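To illustrate why evaluating the full trace matters, here is a minimal, framework-free sketch. It does not use Agenta's SDK; the `Step` type, `evaluate_trace`, and `evaluate_final_output` are hypothetical names chosen for this example, and the trace format is an assumption. The output-only check passes, while the per-step check pinpoints the retrieval step that actually failed.

```python
from dataclasses import dataclass
from typing import Callable, Optional


# Hypothetical trace format: the ordered steps an agent took to answer a request.
@dataclass
class Step:
    name: str    # e.g. "retrieve", "tool_call", "answer"
    output: str  # what this step produced


# A check inspects a single step and returns True if that step looks correct.
Check = Callable[[Step], bool]


def evaluate_trace(steps: list[Step], checks: dict[str, Check]) -> Optional[str]:
    """Run the check registered for each step name.

    Returns the name of the first step that fails its check,
    or None if every checked step passes.
    """
    for step in steps:
        check = checks.get(step.name)
        if check is not None and not check(step):
            return step.name
    return None


def evaluate_final_output(steps: list[Step], expected: str) -> bool:
    """Output-only evaluation: compares just the last step with the expected answer."""
    return bool(steps) and steps[-1].output.strip() == expected.strip()


# Example: retrieval returned nothing, yet the final answer happens to match.
trace = [
    Step("retrieve", ""),
    Step("tool_call", '{"city": "Paris"}'),
    Step("answer", "It is sunny in Paris."),
]
checks: dict[str, Check] = {
    "retrieve": lambda s: len(s.output) > 0,  # retrieval must return some context
    "tool_call": lambda s: "city" in s.output,  # tool call must include a city argument
}

print(evaluate_final_output(trace, "It is sunny in Paris."))  # True
print(evaluate_trace(trace, checks))  # retrieve
```

An output-only evaluator would mark this run as a success; the trace-level evaluator flags the empty retrieval step, which is the kind of hidden failure that step-by-step evaluation is meant to surface.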

Production Observability & Debugging

Gain visibility into your live LLM applications with comprehensive tracing. Every user request is logged, so you can drill down to the exact failure point in complex chains or agentic workflows. Turn any problematic trace into a regression test with a single click, closing the feedback loop quickly. Monitor system health and catch performance regressions automatically with live evaluations running directly on production traffic.

Collaborative Workflow for Cross-Functional Teams

Break down silos and enable your entire team to contribute to AI development. Agenta provides a safe, intuitive UI for domain experts and PMs to experiment with prompts and run evaluations without writing code. With full parity between the UI and API, programmatic and manual workflows integrate seamlessly into a single source of truth. This collaboration ensures the people with the domain knowledge can directly shape and validate the AI's behavior, dramatically speeding up the refinement cycle.

Use Cases of Agenta

Rapid Prototyping and Iteration of LLM Applications

Accelerate the initial build phase of chatbots, copilots, or content generators. Teams can use the unified playground to quickly test different foundation models and prompt strategies using real-world data. The version history allows for quick rollbacks and A/B testing, enabling a fast experimentation cycle that moves from idea to a working, evaluated prototype.

Systematic Performance Validation Before Deployment

Establish a robust gating mechanism for all production changes. Before deploying a new prompt or model, teams can run it through a battery of automated evaluations against benchmark datasets. This evidence-based approach ensures that every update meets quality standards, preventing performance regressions and building stakeholder confidence for rapid, yet safe, deployment cycles.

Debugging Complex Production Issues in Agentic Systems

When a multi-step AI agent fails in production, traditional logging is often insufficient. Agenta's trace observability lets engineers visualize the entire execution path and identify the specific step (e.g., a tool call or reasoning step) where the error occurred. This can shrink debugging from a days-long investigation to a much shorter diagnosis, reducing mean time to resolution (MTTR).

Enabling Domain Expert-Led Prompt Optimization

Empower subject matter experts (e.g., legal, marketing, support) to directly refine AI behavior. Through the collaborative UI, experts can adjust prompts for nuance, accuracy, and brand voice without developer intermediation. They can then immediately run evaluations to see the impact of their changes, creating a tight, efficient feedback loop that leverages deep domain knowledge at the speed of software.

Frequently Asked Questions

Is Agenta really open-source?

Yes, Agenta is fully open-source. You can view the source code on GitHub, self-host the platform on your own infrastructure, and contribute to its development. This provides full transparency, avoids vendor lock-in, and allows for deep customization to fit specific enterprise needs and security requirements.

How does Agenta integrate with existing LLM frameworks?

Agenta is designed for seamless integration. It works natively with popular frameworks like LangChain and LlamaIndex, and is model-agnostic, supporting OpenAI, Anthropic, Azure, open-source models, and more. You can typically integrate Agenta's SDK with a few lines of code, allowing you to add experimentation, evaluation, and observability without rewriting your application.

Can non-technical team members really use Agenta effectively?

Absolutely. A core feature of Agenta is its collaborative UI built for cross-functional teams. Product managers and domain experts can use the intuitive web interface to edit prompts, compare experiment results, and launch evaluations without any coding knowledge. This bridges the gap between technical implementation and domain expertise.

What kind of evaluations can I run on the platform?

Agenta supports a highly flexible evaluation system. You can use LLM-as-a-judge setups, implement custom code-based evaluators for precise metrics, or use built-in evaluators for common tasks. You can evaluate final outputs, but more importantly, you can evaluate intermediate steps in an agent's reasoning trace, which is critical for debugging complex applications.
