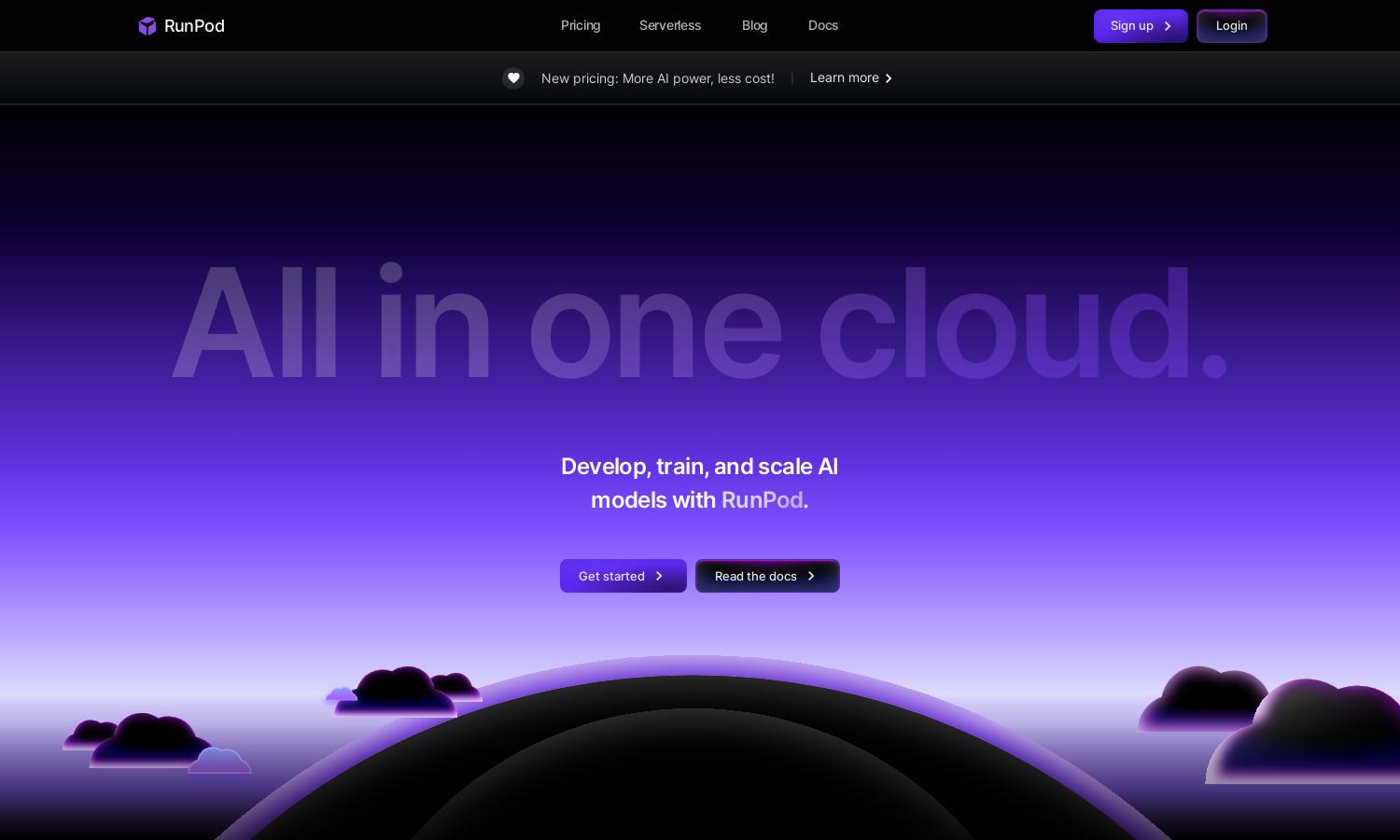

RunPod

About RunPod

RunPod is a cloud platform for developing, training, and scaling AI models. Aimed at startups, academic institutions, and enterprises, it provides on-demand GPU resources and a web interface for deploying and managing models, so teams do not have to build and maintain their own GPU infrastructure.

RunPod offers flexible pricing plans, with GPU rental starting at $0.22/hr for an RTX 3090. Plans include dedicated GPUs, auto-scaling, and additional resources at competitive rates, which keeps both AI training and inference workloads affordable.
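As a rough illustration of how hourly pricing adds up, the sketch below estimates the cost of a run from the $0.22/hr RTX 3090 starting rate quoted in this listing. It assumes a flat hourly rate per GPU and ignores storage and other charges; the function `estimate_cost` is hypothetical, and RunPod's actual billing granularity and current prices may differ.

```python
from decimal import Decimal, ROUND_HALF_UP

# Starting rate for an RTX 3090 as quoted in this listing (assumption: prices change).
RTX_3090_RATE_PER_HR = Decimal("0.22")


def estimate_cost(hours, rate_per_hr=RTX_3090_RATE_PER_HR, gpus=1):
    """Estimate the cost of running `gpus` GPUs for `hours` hours.

    Hypothetical simplification: a flat hourly rate per GPU, with no
    storage, network, or other charges. `rate_per_hr` must be a Decimal.
    """
    hours = Decimal(str(hours))  # going through str() avoids binary float artifacts
    if hours < 0:
        raise ValueError("hours must be non-negative")
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    cost = hours * rate_per_hr * gpus
    # Round to whole cents for display.
    return cost.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(estimate_cost(24))          # one RTX 3090 for a day -> 5.28
print(estimate_cost(12, gpus=8))  # eight GPUs for twelve hours -> 21.12
```

Using `Decimal` rather than `float` keeps the cents exact, which matters when small hourly rates are multiplied over many hours or GPUs.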

RunPod's interface uses a streamlined layout with quick access to essential tools and resources, making it straightforward to navigate, set up AI models, and monitor running workloads.

How RunPod works

To get started, users sign up and select GPU resources based on their needs. They can launch one of the available templates or deploy their own custom containers. The platform handles scaling automatically, letting users focus on training and deploying models, and it provides real-time analytics and logs for tracking performance.

Key Features of RunPod

On-Demand GPU Scaling

RunPod's on-demand GPU scaling lets users adjust their GPU resources in real time, so they pay only for what they use. This improves efficiency and keeps costs down, making RunPod a strong fit for developers running machine learning workloads.

Serverless Inference

RunPod's serverless inference lets users deploy AI models that scale automatically with demand. This helps keep latency low and performance steady during high-traffic periods, which makes it well suited to serving fast, efficient machine learning applications.
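To make serverless inference more concrete: RunPod's Python SDK (`runpod`) structures a serverless worker around a handler function that receives a job dictionary with an `input` field and returns a JSON-serializable result, and `runpod.serverless.start` registers that handler. The sketch below is a minimal, hypothetical worker based on that pattern. The `prompt` input field and the upper-casing "model" are placeholders for real inference code, and the SDK import is guarded so the handler can be tried locally without the `runpod` package installed.

```python
def handler(job):
    """Hypothetical handler: 'infers' by upper-casing a prompt.

    Replace the body with real model inference (e.g. a model loaded once
    at module import time so warm workers can reuse it).
    """
    job_input = job.get("input") or {}
    prompt = job_input.get("prompt")
    if not isinstance(prompt, str):
        # Report bad input back to the caller instead of raising.
        return {"error": "input.prompt must be a string"}
    return {"output": prompt.upper(), "length": len(prompt)}


if __name__ == "__main__":
    try:
        import runpod  # RunPod Python SDK: pip install runpod
    except ImportError:
        # Without the SDK, run the handler once locally as a smoke test.
        print(handler({"id": "local-test", "input": {"prompt": "hello"}}))
    else:
        # Hand the function to the SDK, which pulls jobs and scales workers.
        runpod.serverless.start({"handler": handler})
```

Keeping the handler a plain function of its input means it can be unit-tested locally, while scaling the number of workers up and down is left to the platform.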

Lightning-Fast Cold Start

RunPod's fast cold start sharply reduces startup times for GPU pods, letting users launch applications within milliseconds. This lets developers respond quickly to fluctuating workloads, a significant advantage in dynamic AI environments.
